Wise Guys on WHO Radio - January 11, 2014

The WHO Radio Wise Guys airs on WHO Radio in Des Moines, Iowa on 1040 AM or streaming online at WHORadio.com. The show airs from 1 to 2 pm Central Time on Saturday afternoons. A podcast of show highlights is also available. Leave comments and questions on the Wise Guys Facebook page or e-mail them to wiseguys@whoradio.com.

Here's what's going to kill Facebook someday

It's a normal human instinct to share what we know (or at least, what we think we know). Our very evolution as a species depended (and still depends) upon some individuals learning new things, sharing them with others, and persuading those others to try the new things as well. While it's a vast overstatement to say that the advent of the Internet "changed everything", there are some specific things that it did change quite a lot, and for those changes there are no established rules. Sharing is one of those things.

Traveling backwards in time, prior to what are clumsily called social-networking tools, people shared new ideas via e-mail, or fax, or by photocopying and mailing things like magazine clippings. Now, it is possible to share with a single click on sites like Facebook, Twitter, Google Plus, LinkedIn, and others.

If this were all about sharing genuinely new and useful ideas, then great.

But if we're just reinforcing bad assumptions, prejudices, and the like, then it's a misuse of the tools.

If the social-media politics of the 2012 election were a preview, then the 2016 election cycle will be positively insufferable. It's much easier to call all of one's fellow countrymen idiots from the comfort and privacy of a tablet on the living-room couch or a smartphone in the doctor's waiting room than it is to say the same things to real people's faces. Everyone can see this from the receiving standpoint (who can't name a few offenders who showed up one too many times loudly repeating the wrong opinion on Facebook in 2012?), but it's much harder to recognize our own oversteps.

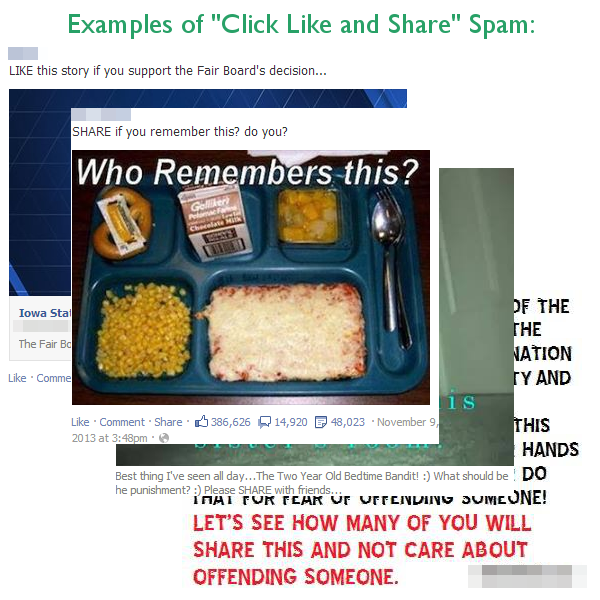

And matters have only gotten worse. Overstepping is greatly enhanced by the psychological programming (really, it's the psychology of propaganda) perpetuated by sites like Buzzfeed and Upworthy, as well as by the incredibly simple-yet-effective command to "Click like and share!" The creators of this content know what they're doing: they're employing implicit peer pressure to get mass numbers of people to parrot their ideas without questioning them.

This is the number-one threat to Facebook right now, and they don't have an easy escape. Simple sharing built the platform. The ability to share pictures took the site to a whole new level of cultural relevance. But the over-simplified sharing of pictures that we didn't create ourselves, but instead mindlessly repeat on behalf of others (the very natural blending of sharing and pictures), is going to be the death of Facebook.

If the average user has something like 250 friends, and scrolls through updates from, say, 50 of them on a given day, then they will almost certainly be exposed to at least one message built around the model of "Click like and share!" More likely, the average user will see several, and the problem is that seeing five of those messages is probably too many, and ten or more would be just plain nauseating. Yet seeing ten in the course of 50 updates requires only that one out of every five of the average friend's updates be something deliberately created for the purpose of being shared, with the other 80% original.
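The arithmetic in the paragraph above can be checked with a short sketch. It assumes, purely for illustration, that each of the 50 updates independently has the same chance (here 20%) of being a "Click like and share!" post, so the number of such posts follows a binomial distribution. The function names are hypothetical.

```python
from math import comb


def expected_shares(updates: int, share_rate: float) -> float:
    """Expected number of share-bait posts among `updates` posts (hypothetical helper)."""
    return updates * share_rate


def prob_at_least(k: int, updates: int, share_rate: float) -> float:
    """P(X >= k) where X ~ Binomial(updates, share_rate).

    Assumes each update is independently share-bait with probability share_rate.
    """
    return sum(
        comb(updates, i) * share_rate**i * (1 - share_rate) ** (updates - i)
        for i in range(k, updates + 1)
    )


if __name__ == "__main__":
    n, p = 50, 0.2  # 50 updates a day, one in five is share-bait (assumed)
    print(f"Expected share-bait posts: {expected_shares(n, p):.1f}")
    for k in (1, 5, 10):
        print(f"P(at least {k}): {prob_at_least(k, n, p):.4f}")
```

Under that assumption, a 20% rate gives an expected count of exactly the ten posts described above, and the chance of seeing at least one is, for practical purposes, certain.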

The problem is that psychology suggests many of us will discount our own originality because (a) it's hard, and (b) we don't want to face the possibility that other people will reject our creativity. On the other hand, when we just "Click like and share", we're usually confirming our own identity (reasoning that, if someone else said what we were thinking, then we must be on to something good). People seek to confirm their own identities on lots of levels: political, religious, cultural...even just based on plain old nostalgia for when we were young.

Yet even if we're only confirming our own identities in this comfortable, seemingly safe way 20% of the time, we're probably sickening the friends who are trying to keep up with us.

How can Facebook stop the madness?

They're quite clearly searching (with some urgency) for a way to throttle what we see in our news feeds, with surveys asking us to rate how interested we are in reading more of certain types of posts from our friends. But throttling what reaches our feeds doesn't overcome the evolutionary programming we all have to (a) share what we (think we) know, and (b) confirm our own identities.

They can't ban pictures, because pictures are like the proverbial horses that have already left the barn. Social-sharing services are never going to retreat and stop allowing people to share things; change will always proceed in the direction of even more sharing (as exemplified by Facebook's recent move to automatically play certain videos in the news feed).

Peer pressure isn't going to make people tone down their over-sharing of "Click like and share" propaganda; instead, people are much more likely to silo themselves among like-minded friends and individually block or throttle back the posts of acquaintances with whom they disagree. The problem is that this kills serendipity and leads to boredom with the platform. A site like Facebook depends on drawing people in to see what's new, so there has to be something actually new and different to see. But when people huddle up in their Facebook cliques, they'll tend to see much less of anything that seems new. What appears new and novel to the sender will tend to seem like old hat to the receiver.

In addition, it should not escape our attention that many people thrive on the siege mentality, perceiving that they're part of some persecuted minority of opinion. That mentality breeds solidarity and cohesion, which is why it's not uncommon to hear people frame their political, religious, cultural, and other beliefs in terms of struggle against a bigger world that just doesn't "get it". The problem from a technical standpoint is that if Facebook allows users to mute the opinions they don't really want to hear (and who doesn't want to silence the opposition when just looking for something light, breezy, and funny that a friend has shared?), then people will tend to find themselves isolated inside cliques of like-minded people. So like-minded, in fact, that it becomes more like a Hallelujah chorus, and that deprives the siege mentality of fuel.

At the root, really, we need friends who disagree with us, because without disagreement, nothing is really new.

Facebook has no easy way out. This trend (and, mark these words, it will quickly accelerate from trend to death spiral if they don't find an answer soon) is a bigger long-term threat to the service than the loss of Facebook's cool cachet among younger users...and that's a big enough threat on its own.

When the history of Facebook is written, it won't be extremism or terminal uncoolness that did it in. It will be the overcrowding of the site with programmatic propaganda that bored users into its eventual abandonment.